When borrowers have a question about their Square Loan, they can write in to support to get it answered. However, questions that an agent can't act on, or simple questions that an FAQ article could answer, are costly: each one requires a human reply even though there's nothing the agent can do or add to the conversation (examples: eligibility, declines).

To tackle this problem, we wanted to increase user engagement with the conversational assistant (ADA chatbot) to deflect non-actionable inquiries.

Project Details

Role: Sole content designer, project manager

Deliverables: ADA Chatbot flow

Team: Edgar L. (ADA Chatbot DRI), Caitlyn M. (CS PMO), Nathaniel V. (Collections DRI), Gavin M. (Legal)

Timeline: 1 month

Problem

After the user types in an initial inquiry, continuation for Square Loans is very low compared to other products at Square. Sellers start a session with ADA by typing in a topic, but they aren't continuing with the flow and clicking on a corresponding subtopic.

The goal of updating the ADA chatbot was to increase engagement while making sure that copy changes didn't increase the number of support cases.

Research

Given that this project had limited time and resources, I relied primarily on secondary research, combining "macro data" with a "content analysis" to understand why engagement with the chatbot was so low for Square Loans.

Methodology

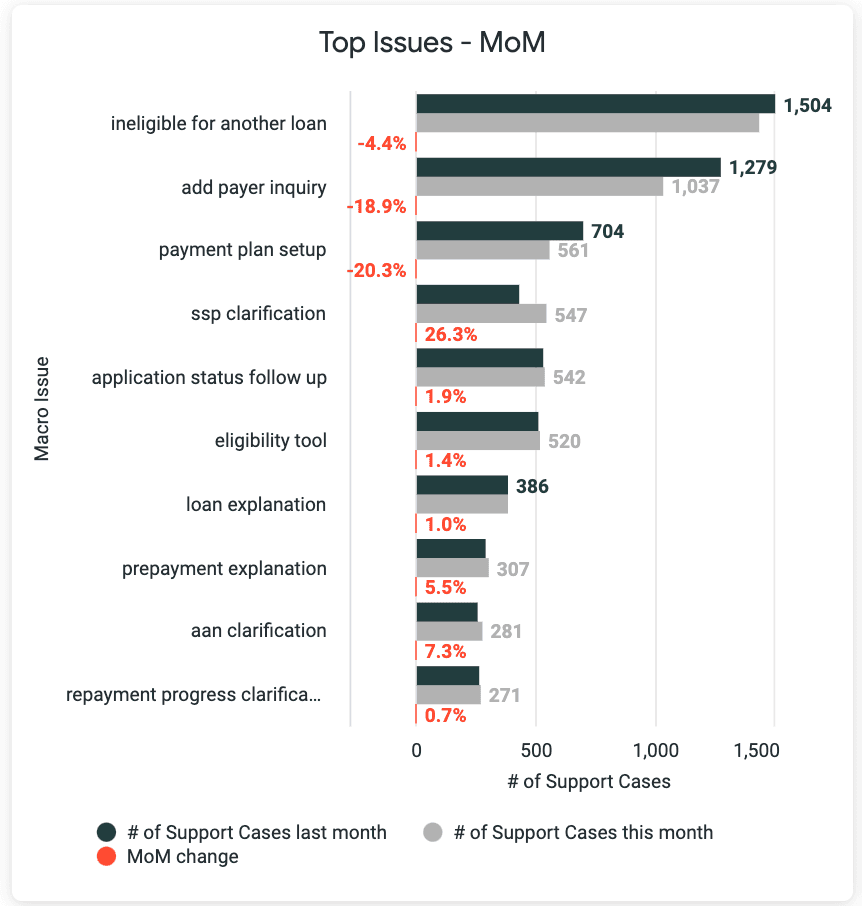

1. View macro data via Looker to identify the top 10 topics that customers are writing in about.

2. Perform a content audit of other products at Square.
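As a rough illustration of step 1, ranking inquiry topics can be sketched in a few lines of Python. This is a minimal sketch, not the actual Looker query: it assumes the macro data has been exported as a list of case records, and the `macro_tag` field name and the sample tags are hypothetical.

```python
from collections import Counter

def top_topics(cases, n=10, tag_field="macro_tag"):
    """Return the n most common macro tags as (tag, count) pairs."""
    # Skip cases that were never tagged (missing or empty tag).
    counts = Counter(case[tag_field] for case in cases if case.get(tag_field))
    return counts.most_common(n)

# Hypothetical sample records; real data would come from a Looker export.
sample_cases = [
    {"macro_tag": "eligibility"},
    {"macro_tag": "repayment"},
    {"macro_tag": "eligibility"},
    {"macro_tag": None},
]

print(top_topics(sample_cases, n=2))
```

Untagged cases are dropped rather than counted as their own topic, so the ranking only reflects inquiries that were actually categorized.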

Content Alignment

Given that users weren't continuing with the ADA chatbot, I wanted to find out whether the list of sub-topics presented in the chatbot was aligned with the inquiries that users had. Again, given the limited time and resources, I looked at historical macro data that tags a Salesforce case with the core issue of an inquiry. With more resources, I would have done additional primary qualitative research and scheduled 1:1 interviews to better understand user behavior when contacting support.

Research Insight

After comparing the top 10 issues identified in the macro data with the existing subtopics in the chatbot, I found that many of the existing subtopics were not aligned with what customers were writing in to support about.
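The comparison itself was done by hand, but the underlying check is a simple set lookup. The sketch below is illustrative only: the function name is hypothetical, and the labels are placeholders borrowed from topics mentioned in this case study, not the real lists.

```python
def alignment_gaps(top_issues, chatbot_subtopics):
    """Return the top issues that have no matching chatbot subtopic."""
    def normalize(label):
        return label.strip().lower()

    offered = {normalize(s) for s in chatbot_subtopics}
    # Preserve the ranking order of the top issues in the result.
    return [issue for issue in top_issues if normalize(issue) not in offered]

# Placeholder labels for illustration; not the actual macro data.
top_issues = ["Eligibility", "Application timeframe", "Cost"]
chatbot_subtopics = ["Managing your Square Loan", "cost"]

print(alignment_gaps(top_issues, chatbot_subtopics))
```

An exact-match comparison like this only works when macro tags and subtopic labels share a vocabulary; in practice, matching them still takes a content designer's judgment.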

Content Navigation

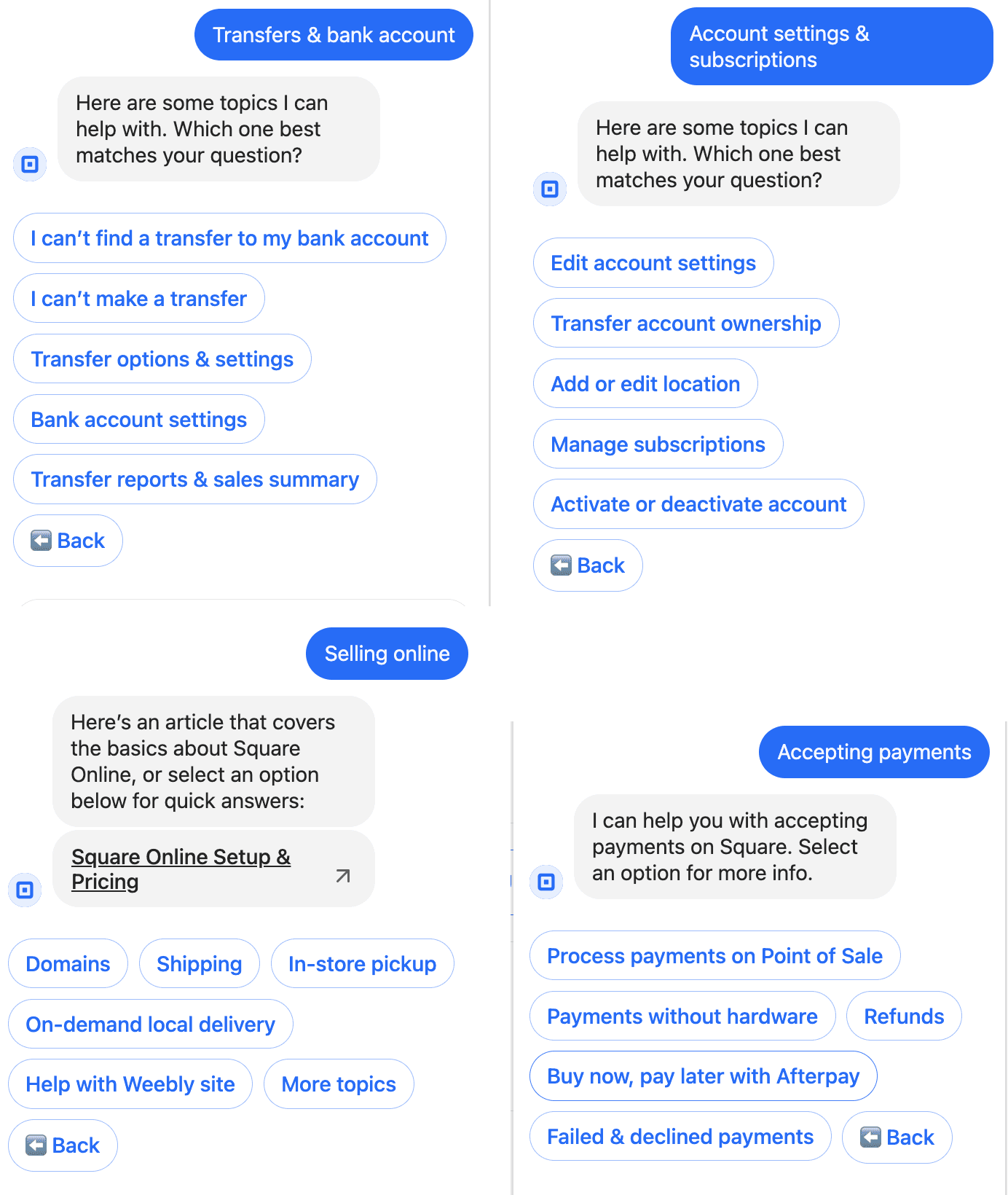

To get an idea of what a high-engagement messaging flow looks like, I partnered with Edgar L. on the ADA chatbot team to look at other Square products with higher engagement. We wanted to find out what made them better so we could replicate it for Square Loans.

Research Insight

• After conducting a content audit of other products at Square, we found that (1) most other products had more than 4 subtopics, and (2) their subtopics were relevant to the questions that users were likely to have.

• The subtopics for Square Loans were vague and nested, while other products had their sub-topics front and center, immediately accessible to the user.

Content Ideation

After identifying these insights, I shared them with the relevant stakeholders (ADA chatbot DRI, CS, Serving, Collections) so we could look at the problem together. Each stakeholder contributed a perspective based on their function and background in the business.

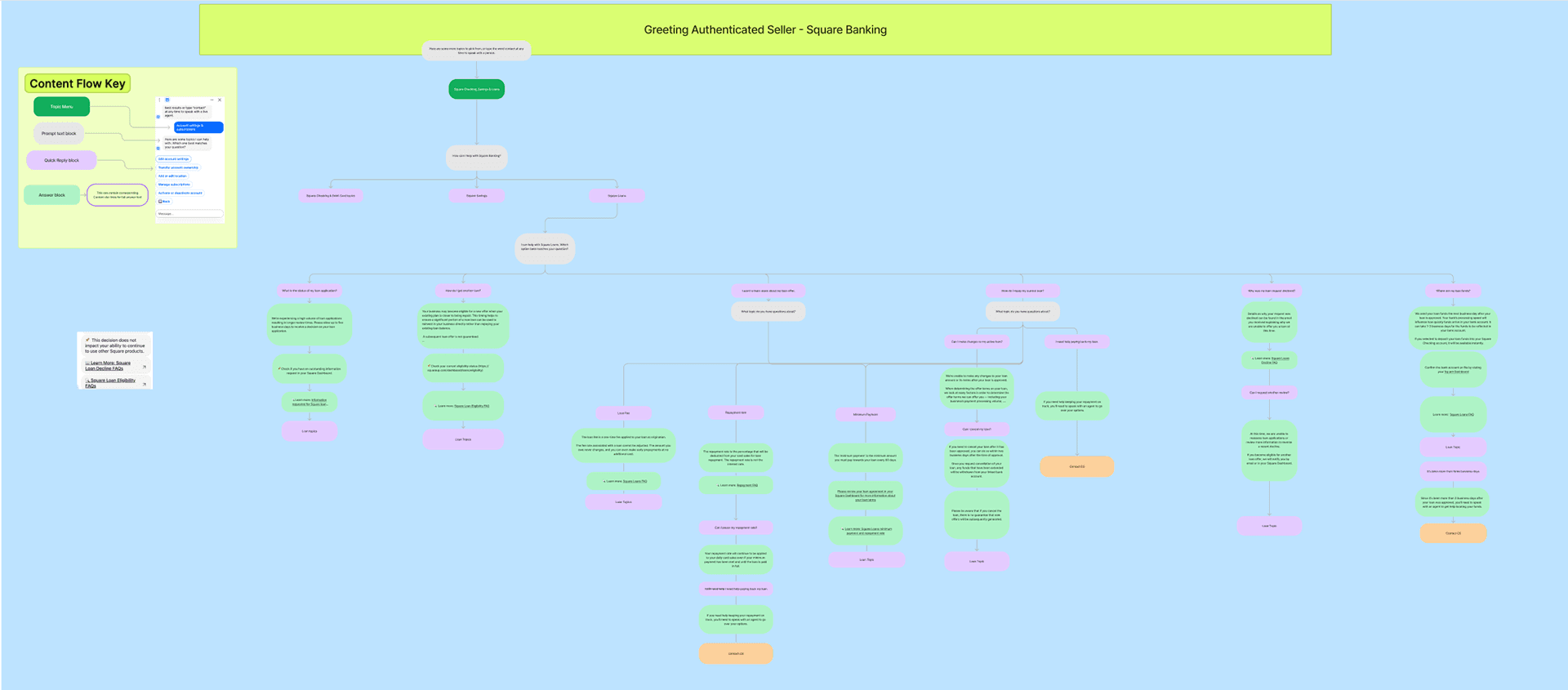

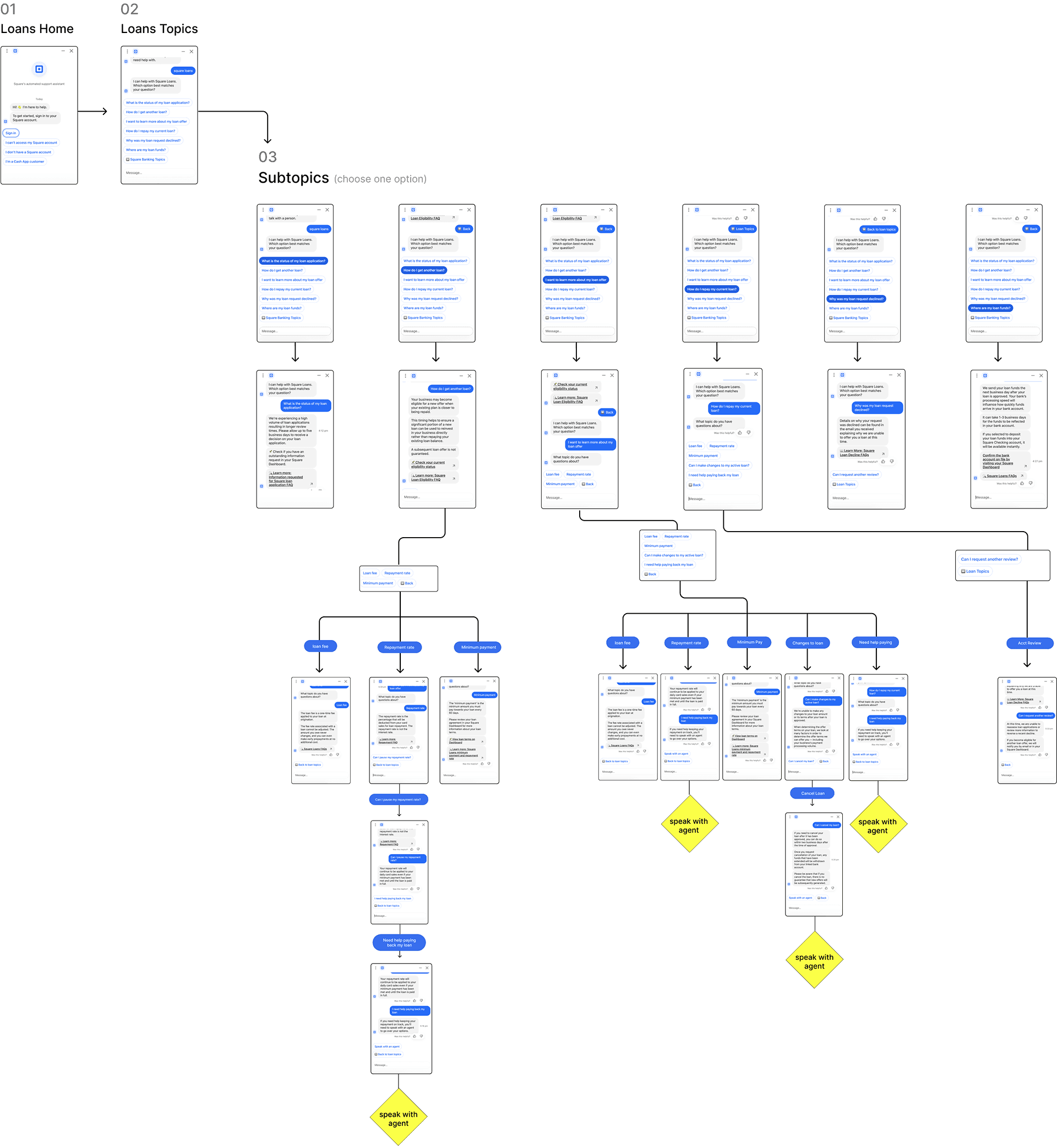

Wireframing

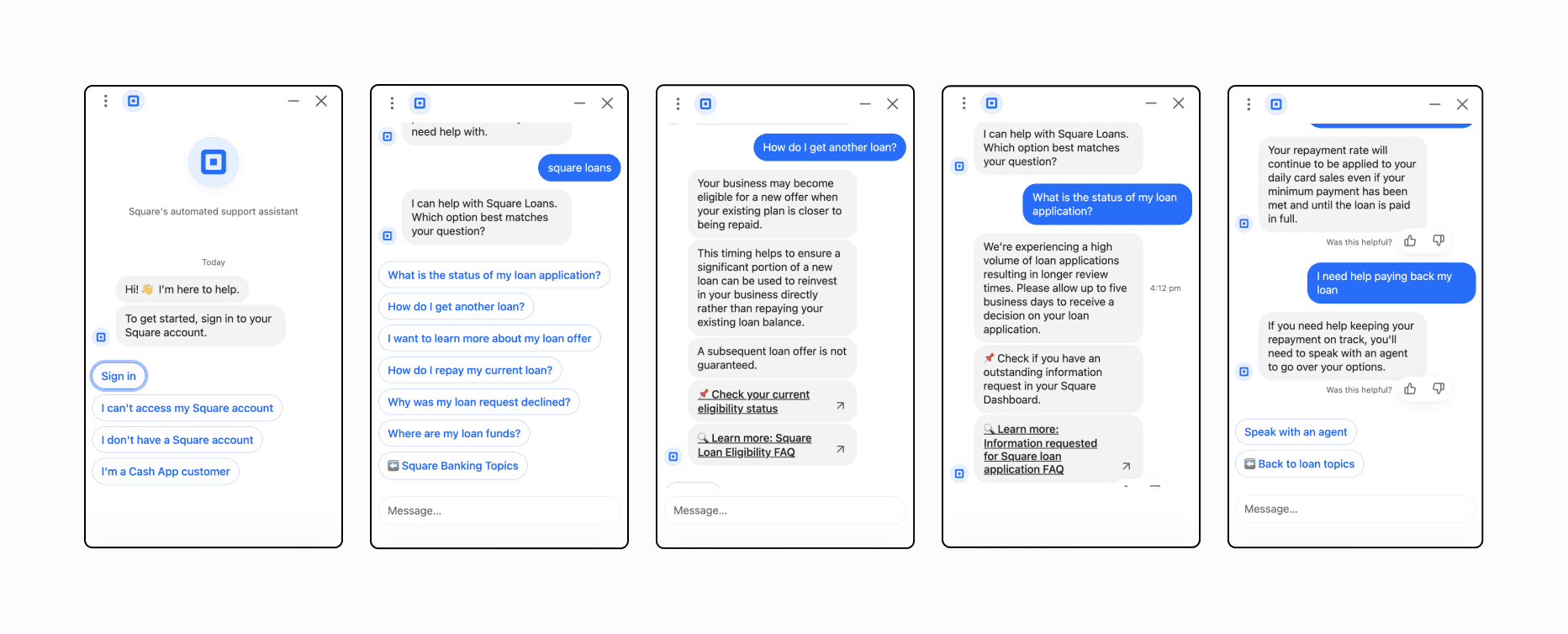

I created a low-fidelity wireframe to mock up how the content would flow. We took the research insights and decided to align the sub-topics presented with the top 7 inquiry drivers that we saw in the macro data. Additionally, to make our messaging flow consistent with the rest of Square, we stopped nesting the sub-topics under vague overarching topics: we removed the first layer of the content hierarchy and replaced it with sub-topics that most closely match the actual questions users write in with.

Implementation

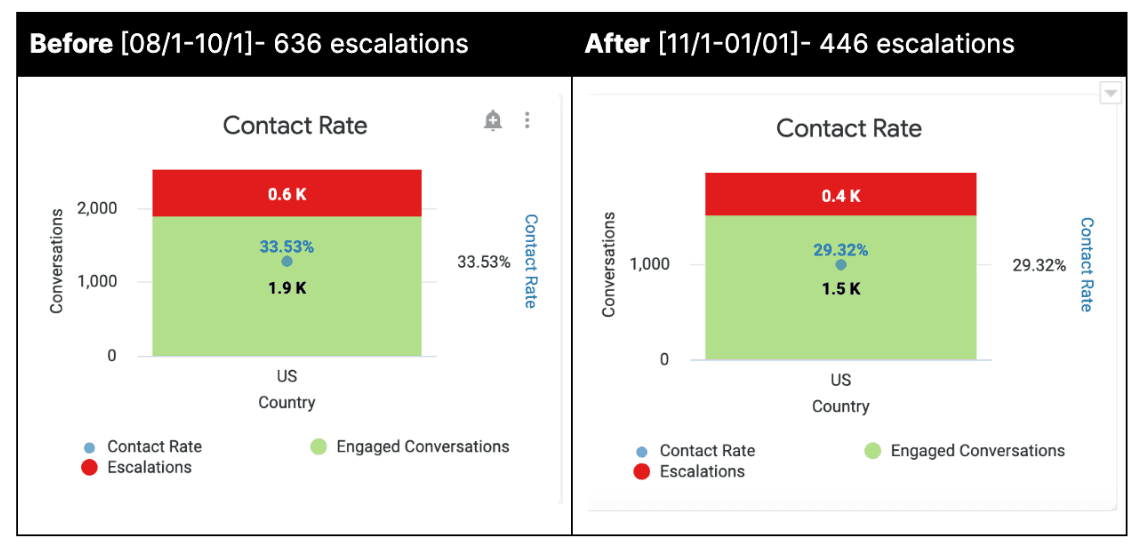

I worked with the ADA chatbot team to implement the chatbot flow. We wanted to conduct an A/B test, but given resource and functionality constraints, we decided to launch to the entire population and then compare results between the 60 days before the updates and the 60 days after.
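The before/after comparison amounts to bucketing sessions into two equal windows around the launch. Here is a minimal sketch of that split; the launch date and session dates are hypothetical placeholders, and it assumes the launch day itself counts toward the "after" window.

```python
from datetime import date, timedelta

def split_windows(session_dates, launch, days=60):
    """Split session dates into equal windows before and after launch."""
    before_start = launch - timedelta(days=days)
    after_end = launch + timedelta(days=days)
    # Launch day is assigned to the "after" window (an assumption).
    before = [d for d in session_dates if before_start <= d < launch]
    after = [d for d in session_dates if launch <= d < after_end]
    return before, after

# Hypothetical launch date and sessions, for illustration only.
launch = date(2023, 6, 1)
sessions = [date(2023, 3, 1), date(2023, 5, 15), date(2023, 6, 1), date(2023, 7, 20)]

before, after = split_windows(sessions, launch)
print(len(before), len(after))
```

Sessions outside both windows (such as the March date above) are ignored, which keeps the two periods the same length.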

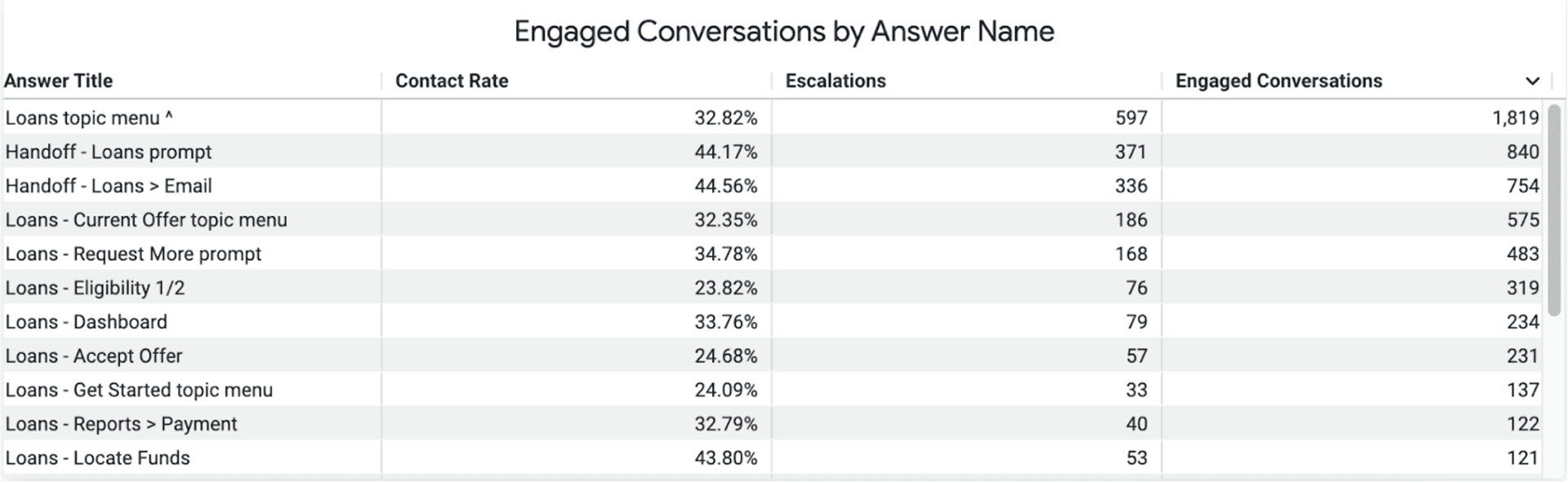

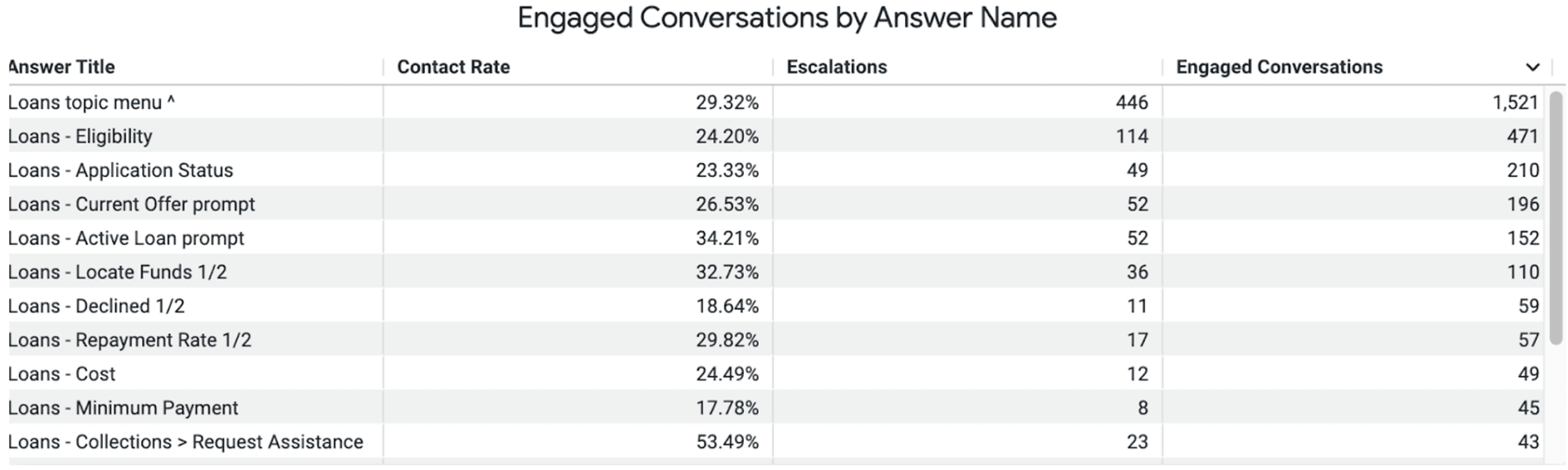

Test Results

Comparing the 60 days before the updates with the 60 days after, we saw an increase in engagement with the ADA chatbot as well as a decrease in overall contact rate (queue deflection), indicating an overall positive effect.

Engagement Analysis

Overall, engagement increased by 24.63% after the changes to the ADA chatbot were made.

After the updates, more people clicked a subtopic and engaged in conversation about the issue they were experiencing. One likely contributing factor is that the matching issue is now easier to find: previously, customers had to click through additional prompts before seeing sub-issues.

Example: before the updates, the path was Loans Topic > Managing your Square Loan > Cost; after the updates, it is Loans Topic > Cost.
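For reference, a figure like the engagement increase is a relative change between the two 60-day periods. The sketch below shows the arithmetic; the input numbers are placeholders chosen for easy math, not the project's actual counts.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, as a percentage."""
    if before == 0:
        raise ValueError("percent change is undefined when the baseline is 0")
    return (after - before) / before * 100

# Placeholder values, not real project data.
print(percent_change(200, 250))  # 25.0
```

A negative result indicates a drop, which is how a decrease in contact rate would show up with the same formula.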

60 days After Changes

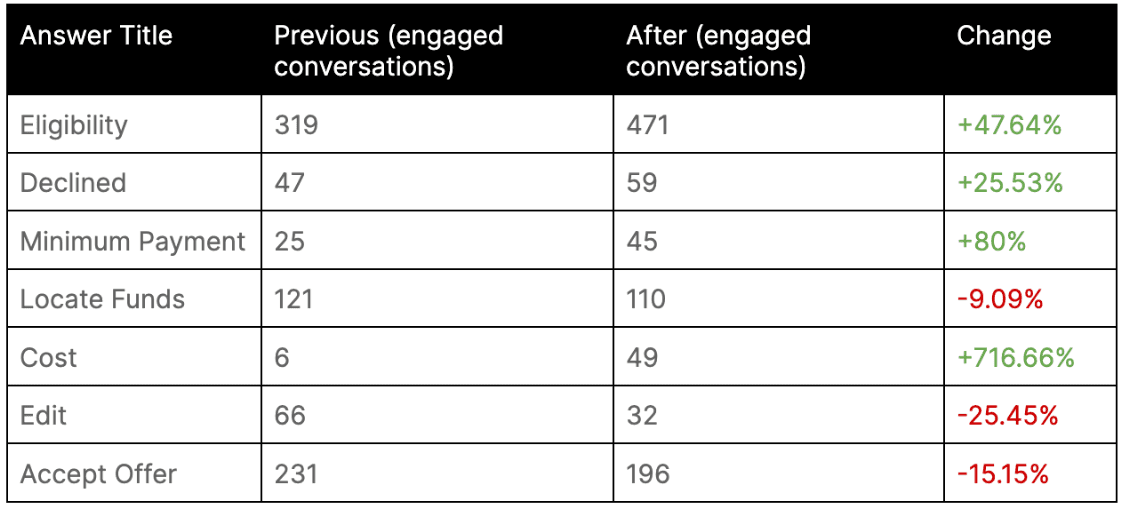

Granular Breakdown

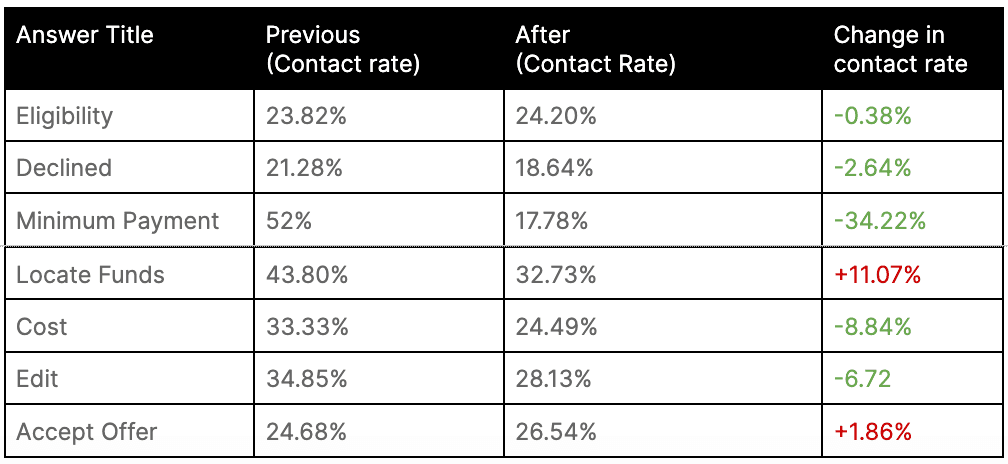

Although the number of people in the funnel is similar (~1200 people in both the 60 days before and the 60 days after the changes), more people are now selecting a subtopic and engaging in conversation than before. Note that not every change maps 1:1, but many of the topics were kept and can be compared directly. I've added all comparable sub-topics to the table on the right.

Queue Deflection Analysis

There is a 4.21% overall drop in contact rate* after the ADA chatbot changes. This means that more customer inquiries were resolved by the ADA chatbot than before the updates, so fewer cases needed a live agent to resolve. This positive change may be due in part to the addition of “top inquiry drivers” as subtopics, such as application timeframe and repayment explanation.

Granular Queue Deflection

©Janet Ko 2024